If I had to pick the one topic in R that was hardest to comprehend, it would be functions. Everything from writing a function to debugging one has never come with clear instructions. On top of that, the tools built to help with this task can be hard to apply to your own problem. This blog intends to get you up and running with what functions are in R, how to use them for tasks, and how to debug them inside RStudio to see exactly what is going on.

Recently I had the opportunity to go to a cider festival to learn about and try new ciders. I'm not the biggest fan of cider, so naturally I opted to try almost every beer that was available, and I wanted to compare what I tasted. If anyone were to ask about my taste buds, I'd say I love shandies and wheat beers. None of the beers I tried were even remotely close to my usual preferences, yet somehow they tasted much better than I anticipated. It would be helpful to have a way to compare the beers I tried, to see just why I enjoyed an American pale ale, and even a cask ale. Using rvest, we can scrape the necessary data about each beer from RateBeer and compare the beers against each other to determine what similarities they share.

But first... some business we need to take care of

Before we get started, a few ground rules need to be established so that you are on the right track for the task at hand. Interacting with the web programmatically can easily feel like uncharted territory at first, almost like drinking your first beer: Where am I, and how did I end up here? But fear not. This blog will give you the resources to avoid getting lost in this abyss.

Tool Time:

In order to gain the most value from what is outlined within this blog, you will need the following tools:

- RStudio

- Visual Studio Code

- GitKraken

Why do we need these, you might ask? Equipping yourself with the right tools can be half the battle with R: it's hard to understand the problem at hand if you don't have the tools to solve it. In addition to these tools, I strongly encourage you to consider a sandbox environment, such as Docker or RStudio Cloud, because R has had some pain points with encodings on different operating systems. If you would like more information on creating your own custom sandbox environment for R, check out this blog post, or RStudio Cloud:

https://medium.com/@peterjgensler/creating-sandbox-environments-for-r-with-docker-def54e3491a3

At a high level, there are two generic ways of obtaining data on the web:

- APIs (Application Programming Interfaces)

- Web scraping: a more brute-force approach

Ok, so what exactly is an API, then? Should I be using it?

“An API is a messenger that takes requests, and then returns a response back to you”

APIs are set up so you can easily interact with services. Let's say I want to pull data on fires in Minneapolis.

If I wanted, I could easily pull this data via data.world's API.

Web scraping, on the other hand, is a much more brute-force way of obtaining data on the web. Not all sites have APIs, so it may be necessary to scrape the data instead. How do we check whether we can scrape a webpage? Simple: check the site's robots.txt file. Check out this video for more information on what a robots.txt file is used for:

For our case, we are using the site RateBeer, so let’s look at their file to see if there are restrictions:

https://www.ratebeer.com/robots.txt

```
User-agent: Mediapartners-Google
Disallow:
User-agent: *
Allow: /
```

RateBeer's robots.txt file allows crawling. The empty Disallow line for Mediapartners-Google blocks nothing, and every other user agent (*) is allowed everything under /, so we are OK to proceed.
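You can also check robots.txt from R instead of by eye. The following is a deliberately simplified sketch in base R. It assumes the file's lines are already in a character vector (copied from above) and only honours Disallow rules in the `User-agent: *` group. The helpers `star_disallows()` and `is_path_allowed()` are hypothetical and do not implement the full robots.txt rules: there is no wildcard matching, no Allow precedence, and no grouping of consecutive User-agent lines.

```r
# Simplified robots.txt check (hypothetical helpers, not a full implementation)
robots_txt <- c(
  "User-agent: Mediapartners-Google",
  "Disallow:",
  "User-agent: *",
  "Allow: /"
)

# Collect the non-empty Disallow prefixes that apply to user agent "*"
star_disallows <- function(lines) {
  fields <- trimws(sub(":.*$", "", lines))     # text before the first colon
  values <- trimws(sub("^[^:]*:", "", lines))  # text after the first colon
  in_star_group <- FALSE
  disallows <- character(0)
  for (i in seq_along(lines)) {
    if (tolower(fields[i]) == "user-agent") {
      in_star_group <- values[i] == "*"
    } else if (in_star_group && tolower(fields[i]) == "disallow" && values[i] != "") {
      disallows <- c(disallows, values[i])
    }
  }
  disallows
}

# A path is allowed if it does not start with any disallowed prefix
is_path_allowed <- function(path, lines) {
  !any(startsWith(path, star_disallows(lines)))
}

is_path_allowed("/beer/brickstone-apa/99472/", robots_txt)
#> [1] TRUE
```

For real projects, dedicated packages such as robotstxt handle the full rule set. The sketch only shows the logic behind the check.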

Grab a sandwich, get some coffee, and go watch the RStudio webinar listed below. RStudio has two great webinars that cover APIs and web scraping in depth; we want part 2:

The slides for the webinar can be found here:

https://github.com/rstudio/webinars/blob/master/32-Web-Scraping/02-Web-Scraping.pdf

After I watched the webinar listed above, I was completely lost on these topics, and rightfully so. Learning the web technologies used to build websites is not a small task, and it can be very hard when you first get started. For further resources, I strongly encourage you to view these slides from useR! 2016. They cover similar ground and do a good job of explaining the lay of the land:

https://github.com/ropensci/user2016-tutorial/blob/master/03-scraping-data-without-an-api.pdf

Most R packages for web scraping are designed to parse the HTML a server sends back, selecting elements with CSS selectors or XPath. They do not run the JavaScript that renders some content in the browser. JavaScript-rendered content is much harder to scrape. It can be done with RSelenium, but that is not for the faint of heart:

RSelenium (ropensci/RSelenium on github.com): an R client for Selenium Remote WebDriver

Understanding and Laying the foundation

To get data to work with, we first need to set up some functions to acquire the variables for each beer. I have only picked four beers to keep this simple, but I hope this example shows how powerful a function can be when put to use. Let's take a look:

To compare the beers, we first need a way to get attributes about each one. RateBeer provides basic statistics about each beer:

Pollyanna Eleanor Beer Descriptive statistics

As you can see above, we want the descriptive statistics for each beer, but how do we find what links to that element? These beer pages don't rely on JavaScript to render the statistics, so we can use browser tools to find the CSS selector or XPath for the element we want. The easiest way to do this is with one of the two browser tools covered below.

To pull the data from the website, we first need to download the webpage, and then select the element we want via a CSS selector or an XPath. This can be an excruciating process, for two reasons:

- CSS selectors should work with rvest, but some did not because of an issue in selectr, the package that translates CSS selectors into XPath behind the scenes: https://github.com/sjp/selectr/issues/7#issuecomment-344230855

- You have no idea whether the path you selected is the correct path for the element you want, which happens more often than I'd like to admit

At first this seems like an almost impossible task, but fear not: we can still use an XPath to select what we want to extract.

Two tools are very useful for finding the correct XPath for the element you wish to pull: SelectorGadget and the Chrome developer tools.

Selector Gadget

SelectorGadget is a point-and-click tool for building CSS selectors, available as a Chrome extension. Install the extension, then click on the element you are interested in. This selects all elements related to that object. Next, click on anything highlighted in yellow that you do not want. There you go!

Using SelectorGadget

More information on SelectorGadget can be found here:

You can also find a well detailed walkthrough here if you need an additional example:
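Once SelectorGadget gives you a selector, you pass it to rvest's `html_nodes()` through the `css` argument. The sketch below runs against a tiny mock page rather than RateBeer. The markup and the `.stats` class are hypothetical stand-ins for whatever selector SelectorGadget produces on the real page.

```r
library(rvest)

# A tiny mock page standing in for a beer page (hypothetical markup)
page <- read_html(
  '<div id="container"><div class="stats">RATINGS: 4</div><div class="other">ignore me</div></div>'
)

# Select with the CSS selector SelectorGadget would give us, then extract the text
html_text(html_nodes(page, css = ".stats"))
#> [1] "RATINGS: 4"
```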

Chrome Developer Tools

If you have issues getting the correct xpath from SelectorGadget, you can also try using the Chrome developer tools, which are very user friendly.

Simply click on View-Developer Tools, which will load up the developer tools.

Next, click the little arrow (inspect) button to interact with the webpage. Your cursor will now show you the code driving each element on the page.

In the screenshot below, we can see what we selected with the mouse and the code that drives that particular element.

Chrome Developer Tools selecting element on page

Click on the highlighted (blue) area, as shown above. In this case, we want the stats container, because it holds the metrics we want to compare. Clicking locks the selection on that element, so your cursor does not try to select the HTML or CSS for other elements. Now right-click on the highlighted HTML code (red box) and select Copy > Copy XPath. This gives you the most specific path to where that element lives on the page.

Perfect! Now that we have the XPath for the element, we can start writing our function to extract data from it.
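It helps to see how the copied XPath is read. It starts at the element whose id is "container", then takes the second `div` child three times in a row. The sketch below tests this against a small mock page. The markup is hypothetical and was built only so that the path from the screenshot resolves. RateBeer's real page structure may differ and can change over time.

```r
library(rvest)

# Hypothetical mock markup, nested so that the copied XPath lands on the stats block
page <- read_html(paste0(
  '<div id="container">',
    '<div>header</div>',                        # div[1]
    '<div>',                                    # div[2]
      '<div>sidebar</div>',                     #   div[1]
      '<div>',                                  #   div[2]
        '<div>beer name</div>',                 #     div[1]
        '<div>RATINGS: 4 MEAN: 3.83/5.0</div>', #     div[2]: the stats
      '</div>',
    '</div>',
  '</div>'
))

stats_xpath <- '//*[@id="container"]/div[2]/div[2]/div[2]'
html_text(html_nodes(page, xpath = stats_xpath))
#> [1] "RATINGS: 4 MEAN: 3.83/5.0"
```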

Setting up functions

Let's recap what we have accomplished so far. First, we identified exactly what we wanted to do: scrape basic statistics about each beer. We then found the necessary XPath, which identifies the element on the webpage we want. Using rvest, we can now write a script that pulls that element from each beer's page.

The RStudio slides (linked earlier) outline a few “core activities” that our function needs to perform for a given webpage. Thinking about it, they make sense:

- Pull down a webpage

- Identify elements we want

- Extract & pull the element out

- Tidy up the element to make it useable

rvest gives us a function for each of the first three steps: read_html() to pull down the page, html_nodes() to identify the elements, and html_text() to extract the text. Because we want to perform these steps on multiple URLs, we can wrap them in a function like so:

```r
library(magrittr)  # for the %>% pipe
library(dplyr)     # for mutate() and pull()
library(rvest)     # html_nodes(), html_text()
library(xml2)      # read_html(), to pull down the HTML
library(selectr)   # translates CSS selectors into XPath for rvest
library(tibble)    # tibble(), tribble()
library(purrr)     # map functions such as map_chr(), and possibly()
library(datapasta) # for recreating tibbles with ease
library(stringr)   # str_split(), str_detect(), used later on
library(tidyr)     # unnest(), separate(), spread(), used later on

# Sample data
sample_data <- tibble::tibble(
  name = c("pollyanna-eleanor-with-vanilla-beans", "brickstone-apa",
           "penrose-taproom-ipa", "revolution-rev-pils"),
  link = c("https://www.ratebeer.com/beer/pollyanna-eleanor-with-vanilla-beans/390639/",
           "https://www.ratebeer.com/beer/brickstone-apa/99472/",
           "https://www.ratebeer.com/beer/penrose-taproom-ipa/361258/",
           "https://www.ratebeer.com/beer/revolution-rev-pils/360716/")
)

# The function: download a page, select the stats container, extract its text
get_beer_stats1 <- function(x) {
  read_html(x) %>%
    html_nodes(xpath = '//*[@id="container"]/div[2]/div[2]/div[2]') %>%
    html_text()
}
```

We could also write our function to be more explicit about when values change, which makes it easier to step through and debug in RStudio:

```r
get_beer_stats2 <- function(x) {
  # download and parse the page
  page <- read_html(x)
  # select the stats container by its XPath
  html_doc <- html_nodes(page, xpath = '//*[@id="container"]/div[2]/div[2]/div[2]')
  # pull out the text
  stats <- html_text(html_doc)
  return(stats)
}
```

Hold up! Weren't we supposed to spell out every action precisely so the computer understands it? Don't we need some sort of for-loop, with a counter that increases after each URL? And why didn't we use dplyr::pull() to pull the vector out of the dataframe and iterate over it first?

One of the challenges of working with purrr is learning to approach the problem with the right mindset. We can think about it from the following angles:

- I have a dataframe of URLs, and I want to iterate over each row (or URL) and perform a given set of actions for each one

- I have a dataframe with a column of URLs, and I want to operate on each URL

- Treating the column of URLs as a vector, I want to operate on each element of the vector

You see, purrr's main workhorse function, map(), is designed to iterate over objects that contain many elements. That lets you focus on writing a function that acts on a single element. In R, these objects are usually lists, list-columns, or plain vectors. This enables the following workflow:

- Determine what you would like to do for a given element

- Turn that “recipe” into a function

- Apply the recipe over the object with purrr (and, if necessary, create a new column to store the results in)
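To answer the "don't we need a for-loop?" question directly, the sketch below compares an explicit loop with `map_chr()`. It uses a hypothetical one-element "recipe", `beer_id_from_link()`, which pulls the numeric id out of a RateBeer URL, so no network access is needed. Both versions produce the same vector. With purrr, you don't manage the counter or pre-allocate the output yourself.

```r
library(purrr)

links <- c("https://www.ratebeer.com/beer/brickstone-apa/99472/",
           "https://www.ratebeer.com/beer/penrose-taproom-ipa/361258/")

# Hypothetical recipe for one element: the last path segment of the URL
beer_id_from_link <- function(link) {
  basename(sub("/$", "", link))
}

# Explicit for-loop: we manage the counter and the output vector ourselves
ids_loop <- character(length(links))
for (i in seq_along(links)) {
  ids_loop[i] <- beer_id_from_link(links[i])
}

# purrr: map_chr() handles the iteration and guarantees a character result
ids_map <- map_chr(links, beer_id_from_link)

identical(ids_loop, ids_map)
#> [1] TRUE
```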

Ok, now let’s apply the function we just created to our data, and see if it works:

```r
sample_data_rev <- sample_data %>%
  mutate(beer_stats = map_chr(.x = link, .f = get_beer_stats1))
```

Before we move on, let's break down exactly what is happening. First, we pipe in the dataframe and tell mutate() we want to add a new column. Next, map_chr() applies our custom function to each element of the link column and returns a character vector, which becomes the new beer_stats column.

Getting the data & Debugging in RStudio

Now that our core function is defined, we can use RStudio to walk through it if we want to see how values change. Simply enable debugging by going to:

Debug->On Error-> Error Inspector

Next, flag your function for debugging with debug():

```r
# Open the RStudio debugger every time get_beer_stats1() is called
# (or use debugonce(get_beer_stats1) to debug only the next call)
debug(get_beer_stats1)
```

When you run code that calls your function, you will enter debug mode and can step through the code to see how values change:

```r
sample_data_rev <- sample_data %>%
  mutate(beer_stats = map_chr(.x = link, .f = get_beer_stats1))

# Turn debugging off again when you are done
undebug(get_beer_stats1)
```

Expanding on our example, let's say some of our URLs may cause hiccups. How would we make a function that handles these errors with ease?

purrr equips us with error-handling functions that wrap our function so it handles errors gracefully. We can add error handling with the possibly() function:

```r
# Return NA instead of failing when a page can't be scraped
sample_data_rev <- sample_data %>%
  mutate(beer_stats = map_chr(.x = link,
                              .f = possibly(get_beer_stats1, otherwise = NA_character_)))
```

possibly() is very similar to tryCatch(). It wraps the function we created in another function that returns the `otherwise` value instead of raising an error. This can be a bit confusing at first glance, but it makes sense once you have it set up properly.
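To see possibly() at work without hitting the network, the sketch below uses a hypothetical stand-in for `get_beer_stats1()` that throws an error on anything that isn't a RateBeer URL. It also writes the same behaviour by hand with `tryCatch()`, which shows how close the two are.

```r
library(purrr)

# Hypothetical stand-in for get_beer_stats1(): errors on URLs it can't handle
fragile_stats <- function(url) {
  if (!grepl("ratebeer\\.com", url)) stop("not a RateBeer URL: ", url)
  "RATINGS: 4"
}

# possibly(): return NA instead of raising the error
safe_stats <- possibly(fragile_stats, otherwise = NA_character_)

# Roughly the same thing written by hand with tryCatch()
manual_safe_stats <- function(url) {
  tryCatch(fragile_stats(url), error = function(e) NA_character_)
}

urls <- c("https://www.ratebeer.com/beer/brickstone-apa/99472/",
          "https://example.com/not-a-beer")

map_chr(urls, safe_stats)         # "RATINGS: 4", then NA
map_chr(urls, manual_safe_stats)  # same result
```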

Encoding and R: Entering the Abyss

Before we move further, it may be worth noting that the following is what I will call “the Abyss”, or Mordor. Beware that this is not for the faint of heart, so if you feel that you are ready, then proceed. Otherwise, go make a sandwich, and take a nap.

Mordor, aka how Encoding feels in R

For me, encoding in R really feels like Mordor from The Lord of the Rings: a never-ending pit that seems to lead nowhere good. Not to worry, though; you are in good hands. Let's go.

So we have our data, and it should be all set for cleanup, right? Nope. Why is this the case? Earlier we mentioned that our workflow consisted of the following steps:

- Pull down a webpage

- Identify elements we want

- Extract & pull the element out

- Tidy up the element to make it useable

Part of the issue with this workflow is that it assumes data pulled from a webpage is ready to go, right off the boat. Unfortunately, that is not the case with data from the web. Text from the web is encoded, and detecting encoding issues can be a real hassle. You may not find out about them until much further downstream in your analysis, like I did.

Before we step in any further, I would encourage you to first read the following articles. This will help lay the foundation for understanding what exactly is wrong with our text data, and strategies to help detect the culprit:

Character encodings for beginners: “What is a character encoding, and why should I care?” (www.w3.org)

To reiterate, your computer stores text as bytes. A character encoding, such as UTF-8 or Windows-1252 (often loosely called “ANSI”), defines how those bytes map to characters. Depending on your platform's native encoding, R can cause you much grief here. As a rule of thumb, work in UTF-8 whenever possible, because it causes the fewest pain points whatever your platform's native encoding is. Finding the source of encoding issues can be a challenge, so the following tools will help:

- textclean: used to detect issues with encoded text

- rvest: good for attempting to guess the encoding if you are unsure

- datapasta: good for easily recreating a tibble

- stringi: a brute-force way to check whether the Unicode matches what we are looking at, and to clean it

- base::charToRaw: to view the raw bytes of a string

- tools::showNonASCII and iconv: to show non-ASCII characters

- Unicode Inspector: https://apps.timwhitlock.info/unicode/inspect

- Unicode Table: http://www.utf8-chartable.de/
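To make the bytes-versus-encoding idea concrete, here is a small base-R sketch using `charToRaw()` and `iconv()` from the list above. The word "café" is just an illustrative example. The same four characters are stored as different bytes in UTF-8 and in Latin-1 (ISO-8859-1).

```r
x <- "caf\u00e9"   # "café"; the \u escape gives a UTF-8 string
Encoding(x)
#> [1] "UTF-8"

# In UTF-8, "é" is stored as two bytes (c3 a9)
charToRaw(x)
#> [1] 63 61 66 c3 a9

# The same text in Latin-1 stores "é" as a single byte (e9)
latin <- iconv(x, from = "UTF-8", to = "latin1")
charToRaw(latin)
#> [1] 63 61 66 e9
```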

Our general workflow will be as such:

- Detect or identify issues with the text

- Attempt to fix the encoding

Before we move on, I strongly encourage you to make whitespace visible in RStudio: go to Tools > Global Options > Code and enable “Show whitespace characters”.

Configuring whitespace characters in RStudio

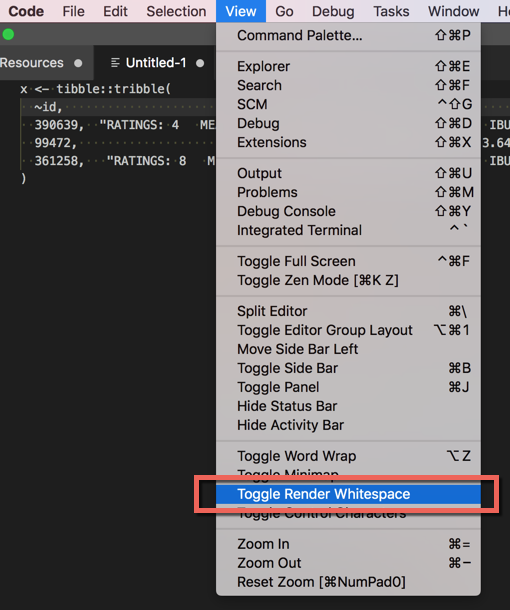

Also, do the same in Visual Studio Code by going to View > Toggle Render Whitespace.

Toggle Render Whitespace in Visual Studio Code

Let's take a small bit of code as an example. Suppose you get this data:

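```r
# The gaps between fields are two no-break spaces (U+00A0) followed by a
# regular space, as in the scraped text (see the iconv() output below);
# they are written as \u00a0 escapes so they survive copy-and-paste
bad_data <- tibble::tribble(
  ~id,    ~value,
  390639, "RATINGS: 4\u00a0\u00a0 MEAN: 3.83/5.0\u00a0\u00a0 WEIGHTED AVG: 3.39/5\u00a0\u00a0 IBU: 35\u00a0\u00a0 EST. CALORIES: 204\u00a0\u00a0 ABV: 6.8%",
  99472,  "RATINGS: 89\u00a0\u00a0 WEIGHTED AVG: 3.64/5\u00a0\u00a0 EST. CALORIES: 188\u00a0\u00a0 ABV: 6.25%",
  361258, "RATINGS: 8\u00a0\u00a0 MEAN: 3.7/5.0\u00a0\u00a0 WEIGHTED AVG: 3.45/5\u00a0\u00a0 IBU: 85\u00a0\u00a0 EST. CALORIES: 213\u00a0\u00a0 ABV: 7.1%"
)

Encoding(bad_data$value)
#> [1] "UTF-8" "UTF-8" "UTF-8"
```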

How would we detect whether there is an issue with it? R reports the strings as UTF-8, so we should be fine... right? Isn't that the encoding the webpage told us it used? Yes, but looking deeper into the data, some characters didn't get converted correctly. So how exactly do we “correct” bad UTF-8 text?

The First Ring of Fire: Detecting the problem

Well, for starters, we could try to re-create the dataframe with datapasta, as below, and hope that something stands out:

```r
datapasta::tribble_paste(bad_data)
```

Lucky for us, when we do this, we can see that there is something that seems funny:

Odd red spaces in our data?

OK, so there are some odd red spaces inside our data... what does that even mean, RStudio? If we paste the output into Visual Studio Code, we can see something a bit peculiar:

Something in the above screenshot seems off: why is there no space where the blue arrows are? Now, say we wanted to look at not just three beers, but hundreds or even thousands. Could inspecting the output by eye still work? Sure, but there has to be a better method, and there is:

```r
textclean::check_text(bad_data$value)
#> (output truncated)
#> ========= NON ASCII =========
#> The following observations were non ascii:
#> 1, 2, 3
#> The following text is non ascii:
#> 1: RATINGS: 4 MEAN: 3.83/5.0 WEIGHTED AVG: 3.39/5 IBU: 35 EST. CALORIES: 204 ABV: 6.8%
#> 2: RATINGS: 89 WEIGHTED AVG: 3.64/5 EST. CALORIES: 188 ABV: 6.25%
#> 3: RATINGS: 8 MEAN: 3.7/5.0 WEIGHTED AVG: 3.45/5 IBU: 85 EST. CALORIES: 213 ABV: 7.1%
#> *Suggestion: Consider running `replace_non_ascii`
```

The textclean package is a lighter-weight port of functionality from the qdap package. qdap is designed to work with text data but requires rJava to work properly, which is why the lighter textclean is handy for detecting issues in our text. As we can see above, check_text() flags all three observations. That confirms our text contains non-ASCII characters, which will cause headaches if we don't take care of them now.

Now, if you are like me, getting a grip on what exactly is going on in the string can be a challenge: where in the string do these issues occur? Fortunately, base R provides some excellent tools to help:

```r
iconv(bad_data$value[[2]], to = "ASCII", sub = "byte")
#> [1] "RATINGS: 89<c2><a0><c2><a0> WEIGHTED AVG: 3.64/5<c2><a0><c2><a0> EST. CALORIES: 188<c2><a0><c2><a0> ABV: 6.25%"

tools::showNonASCII(bad_data$value[[2]])
#> 1: RATINGS: 89<c2><a0><c2><a0> WEIGHTED AVG: 3.64/5<c2><a0><c2><a0> EST. CALORIES: 188<c2><a0><c2><a0> ABV: 6.25%
```

Both iconv() and tools::showNonASCII() reveal odd bytes in the string, shown inside the < >. The pair <c2><a0> appears over and over.

For a better feel for what these characters are, we can plug the raw output from datapasta's tribble_paste() into Tim Whitlock's Unicode Inspector. It shows that we have a character called “No-Break Space”:

No Break Space

The inspector also shows the character's code, U+00A0. To verify, we can write that code in an R string with the \u prefix (\u00A0) and search for it, and sure enough:

```r
stringr::str_detect(bad_data$value[[2]], "\u00A0")
#> [1] TRUE
```
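To connect the bytes <c2><a0> to the code point U+00A0, here is a quick base-R check. Nothing in it is specific to the beer data.

```r
nbsp <- "\u00a0"

charToRaw(nbsp)        # the same bytes iconv() and showNonASCII() printed
#> [1] c2 a0
utf8ToInt(nbsp)        # the code point as an integer
#> [1] 160
sprintf("U+%04X", utf8ToInt(nbsp))
#> [1] "U+00A0"
nbsp == " "            # looks like a space, but is a different character
#> [1] FALSE
```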

The Second Ring of Fire: Fixing UTF-8

It works! Now we need to figure out how to repair the string. We could fix it with rvest, textclean, or stringi:

```r
# bad_data is the tibble defined earlier
# For reference:
# https://stackoverflow.com/questions/29265172/print-unicode-character-string-in-r
# stringi also works mostly in UTF-8, which is very comforting to know:
# https://jangorecki.gitlab.io/data.table/library/stringi/html/stringi-encoding.html
library(stringr)

str_detect(bad_data$value, "\u00A0")

# Option 1: textclean removes the non-ASCII characters it detects
ex1 <- textclean::replace_non_ascii(bad_data$value)

# Option 2: rvest tries to repair the encoding while preserving the characters
ex2 <- rvest::repair_encoding(bad_data$value)
```

textclean removes the characters it detects, while rvest tries to preserve them. rvest does offer this capability, but it doesn't clean the text reliably. Instead, stringi offers stri_trans_general(), which keeps the three spaces between each field intact. We can then use those spaces as delimiters to clean the data even further.

```r
# Transliterate to ASCII equivalents; the no-break spaces become regular spaces
bad_data$value <- stringi::stri_trans_general(bad_data$value, "latin-ascii")
```
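If the no-break space is the only problem, base R offers a narrower alternative: replace U+00A0 directly with `gsub()`. This is a different technique from the stringi transliteration above, shown here as a sketch. The string below reproduces the second value, assuming the separator seen in the iconv() output (two no-break spaces followed by a regular space).

```r
# One field-separated string, as in bad_data$value[[2]] before cleaning
x <- "RATINGS: 89\u00a0\u00a0 WEIGHTED AVG: 3.64/5\u00a0\u00a0 EST. CALORIES: 188\u00a0\u00a0 ABV: 6.25%"

# Replace every no-break space (U+00A0) with a regular space
cleaned <- gsub("\u00a0", " ", x, fixed = TRUE)

all(utf8ToInt(cleaned) < 128)   # the string is now pure ASCII
#> [1] TRUE

# The three-space gaps can now serve as a delimiter: four fields come back
fields <- strsplit(cleaned, "   ", fixed = TRUE)[[1]]
length(fields)
#> [1] 4
```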

Scaling up: Encoding with larger amounts of data

Now, this sounds awesome, but maybe you are like me and find a massive amount of beer data (say 2 GB) with some encoding issues. We could run a function to clean the data after import, but that could take quite a bit of time. Is there a better way of dealing with this mess? Yes, there is. Enter iconv:

iconv is a GNU command-line utility that converts text from one encoding to another, forcing the data into its correct form while trying to retain as much of it as possible.

Let’s use data from here as an example:

At first glance the data may seem a bit messy, but let's focus on converting it into UTF-8. First, let's use gunzip() from the R.utils package to unzip the file to a directory.

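```r
library(R.utils)  # provides gunzip()

# Gunzip the RateBeer file, keeping the original .gz (remove = FALSE)
gunzip(filename = "~/petergensler/Desktop/Ratebeer.txt.gz",
       destname = "~/petergensler/Desktop/Ratebeer.txt",
       remove = FALSE)
```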

The ~ in this command means “relative to your home directory”. Type cd in a terminal, and you will be taken to your home directory.

Next, we can use the shell to count the lines in this file with wc -l. We can also see what the file utility thinks the encoding is with file -I (the macOS flag; on Linux, use file -i):

```bash
$ wc -l Ratebeer.txt
22212596 Ratebeer.txt
$ file -I Ratebeer.txt
Ratebeer.txt: text/plain; charset=utf-8
```

OK, so this looks fine, but with 22 million lines, any encoding problems hiding inside would seem like a hassle to fix. Not quite: iconv makes this process a breeze:

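```bash
# Approach 1: convert from ISO-8859-1 (what we think the file is) to UTF-8
iconv -f ISO-8859-1 -t UTF-8 Ratebeer.txt > RateBeer-iconv.txt

# Approach 2: convert to UTF-8, omitting characters that can't be converted (-c)
iconv -c -t UTF-8 Ratebeer.txt > Ratebeer-iconv.txt
```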

With approach one, we tell iconv which encoding we think the file is in (ISO-8859-1) and that we want UTF-8, and we write the result to a new file. Approach two is much more brute-force: we simply tell iconv that we want UTF-8, and the -c flag makes it omit any characters it cannot convert. Voilà! We can now read the file in as raw lines if needed, with no hiccups, all thanks to iconv.

One thing to note: R does have an iconv() function, but I have found the command-line utility much more versatile for my needs. You can simply put a bash chunk in an R Markdown notebook. Use the command line.
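For files small enough to read into memory, R's own `iconv()` can do what approach 1 does. The sketch below writes a tiny temporary file containing a Latin-1 byte, as a made-up stand-in for the RateBeer file. It shows that the raw line is not valid UTF-8, then converts it. For a multi-gigabyte file, the command-line tool above remains the better fit.

```r
# Write a tiny file containing the Latin-1 bytes for "café" plus a newline
tmp <- tempfile(fileext = ".txt")
writeBin(as.raw(c(0x63, 0x61, 0x66, 0xe9, 0x0a)), tmp)

# Read the line as-is: the lone 0xe9 byte is not valid UTF-8
raw_lines <- readLines(tmp, warn = FALSE)
validUTF8(raw_lines)
#> [1] FALSE

# Same idea as approach 1: declare the source encoding and convert to UTF-8
fixed <- iconv(raw_lines, from = "latin1", to = "UTF-8")
validUTF8(fixed)
#> [1] TRUE
fixed == "caf\u00e9"
#> [1] TRUE

unlink(tmp)
```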

Part 5: Cleaning up the data

Now that we have obtained our data and cleaned the encoding, let's try to get the basic statistics into their respective columns. At first glance this seems simple: we just split the statistics column on a delimiter, and life should be good. But of course, one of the beers does not have the same number of elements as the others, which wreaks havoc at first sight (as if encoding wasn't challenging enough).

Just to recap, our data looks like this:

```r
# The cleaned data: after stri_trans_general(), fields are separated by three regular spaces
bad_data <- tibble::tribble(
  ~name, ~link, ~beer_stats,
  "pollyanna-eleanor-with-vanilla-beans",
  "https://www.ratebeer.com/beer/pollyanna-eleanor-with-vanilla-beans/390639/",
  "RATINGS: 4   MEAN: 3.83/5.0   WEIGHTED AVG: 3.39/5   IBU: 35   EST. CALORIES: 204   ABV: 6.8%",
  "brickstone-apa",
  "https://www.ratebeer.com/beer/brickstone-apa/99472/",
  "RATINGS: 89   WEIGHTED AVG: 3.64/5   EST. CALORIES: 188   ABV: 6.25%",
  "penrose-taproom-ipa",
  "https://www.ratebeer.com/beer/penrose-taproom-ipa/361258/",
  "RATINGS: 8   MEAN: 3.7/5.0   WEIGHTED AVG: 3.45/5   IBU: 85   EST. CALORIES: 213   ABV: 7.1%",
  "revolution-rev-pils",
  "https://www.ratebeer.com/beer/revolution-rev-pils/360716/",
  "RATINGS: 34   MEAN: 3.47/5.0   WEIGHTED AVG: 3.42/5   IBU: 50   EST. CALORIES: 150   ABV: 5%"
)
```

The task is twofold: we want to split each statistic into its own column, while using the “:” to keep each key-value pair together.

```r
library(dplyr)
library(stringr)
library(tidyr)

final_output <- bad_data %>%
  # create a new list column; str_split() returns a list
  mutate(split = str_split(beer_stats, "   ")) %>%
  # unnest the list column so each statistic gets its own row
  unnest(split) %>%
  # separate each "KEY: value" pair into two columns
  separate(split, c("type", "valeur"), ": ") %>%
  # spread back to one row per beer, with NA where a beer lacks a statistic
  spread(type, valeur) %>%
  rename_all(str_trim) %>%
  select(-beer_stats, -link)
```

When I first ran this script, the very first line failed right out of the gate because of the encoding issues. But I never got an error message until I actually tried to use spread().

At first this can seem a bit convoluted, especially if you are unfamiliar with list-columns. When I first looked at this code, str_split() seemed to behave oddly and felt like an unnecessary burden. The trick is that unnesting the list-column turns each beer into several rows, one per statistic, and repeats the beer's name and link on each row, a bit like a join. That long key-value layout is what lets spread() build the columns dynamically, filling in NA wherever a beer lacks a statistic.
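A toy version makes the row counts easier to see. The two beers below are hypothetical. The point is that unnest() produces one row per list element, not every combination, and that spread() fills NA where a beer lacks a field.

```r
library(tibble)
library(tidyr)

# Two hypothetical beers; the second one has no IBU field
toy <- tibble(
  name  = c("beer_a", "beer_b"),
  split = list(c("IBU: 35", "ABV: 6.8%"), "ABV: 5%")
)

# unnest(): one row per list element, with name repeated (3 rows, not 2 x 2 = 4)
long <- unnest(toy, split)
nrow(long)
#> [1] 3

# separate() + spread(): back to one row per beer; beer_b gets NA for IBU
wide <- spread(separate(long, split, c("type", "value"), ": "), type, value)
```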

Now our final_output table looks like this:

```
> head(final_output)
# A tibble: 4 x 7
  name                                 ABV   `EST. CALORIES` IBU   MEAN     RATINGS `WEIGHTED AVG`
  <chr>                                <chr> <chr>           <chr> <chr>    <chr>   <chr>
1 brickstone-apa                       6.25% 188             <NA>  <NA>     89      3.64/5
2 penrose-taproom-ipa                  7.1%  213             85    3.7/5.0  8       3.45/5
3 pollyanna-eleanor-with-vanilla-beans 6.8%  204             35    3.83/5.0 4       3.39/5
4 revolution-rev-pils                  5%    150             50    3.47/5.0 34      3.42/5
```

Interestingly, the beer with the most ratings (brickstone-apa, with 89) has no mean score listed, yet it has the highest weighted average (3.64/5). Pollyanna, on the other hand, has the highest mean (3.83/5.0), but that comes from only four ratings.
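Going beyond eyeballing (for example, asking whether ABV relates to score) requires turning the character columns into numbers. The sketch below assumes the values from the table above and a hypothetical `to_number()` helper. The helper keeps the leading number and drops suffixes such as "%" or "/5".

```r
library(dplyr)
library(tibble)

# Values copied from the final_output table above
scores <- tribble(
  ~name,                                  ~ABV,    ~`WEIGHTED AVG`,
  "brickstone-apa",                       "6.25%", "3.64/5",
  "penrose-taproom-ipa",                  "7.1%",  "3.45/5",
  "pollyanna-eleanor-with-vanilla-beans", "6.8%",  "3.39/5",
  "revolution-rev-pils",                  "5%",    "3.42/5"
)

# Hypothetical helper: drop everything from the first "/" or "%" onward
to_number <- function(x) as.numeric(sub("[/%].*$", "", x))

scores_num <- scores %>%
  mutate(abv = to_number(ABV),
         weighted_avg = to_number(`WEIGHTED AVG`))

# With only four beers this is illustrative, not meaningful
cor(scores_num$abv, scores_num$weighted_avg)
```

You would want many more beers than four before reading anything into the correlation.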

Closing Thoughts

As you finish reading this article, I encourage you to pick some beers (or beverages of your choice) and try to collect data on them. What do you notice? Is there a correlation between ABV (alcohol by volume, i.e. how strong the drink is) and the beer's average review score? I hope this tutorial has shown you how to use R to your advantage when solving problems. I also hope you have learned more about encoding in R and the tools that help with these challenges. I've outlined some thoughts below, as I've been reflecting on how this data challenged me in so many ways, not just with R but in my personal workflow in general.

Encoding

As I have been working with R over the past few days, one thing I keep noticing is that when R fails, it can be hard to tell exactly when and why. As a novice, I find it incredibly challenging to diagnose which tool is failing, because R does not fail fast and fail hard. With the encoding of our strings:

- Did reading the html fail?

- Was it just tidyr that had issues with UTF-8, or is this a deeper issue?

- If base R has so many encoding issues, is it a “firm foundation” for any project, work-related or not?

Going through the hell of encoding issues made it clear to me that on Windows, encoding can be a disaster with R. I even looked at a different flavor of R released by TIBCO, called TERR, because so many of base R's encoding issues were such a hassle to deal with. These issues, while small, sent me a message about R's core: it is simply not a stable foundation. Working with functions whose output you can't trust is extremely hindering from a development point of view. The tidyverse definitely has a lot to offer newcomers, with new packages being developed. Still, this raises a good question: should you be using R in production pipelines, or even for analysis? Everything seems smooth when you don't hit any of these issues, but once you do, they are a true pain to debug, especially for a novice to the language.

purrr map vs dplyr: Riding the line

At first sight purrr seems like an ideal package, but it can be hard to work with if you don't have the right use case. In our example, we had URLs that we wanted to apply a function over.

However, while purrr is designed to apply functions over the elements of an object, most of the time we simply want to apply a function to every column that meets a condition. One example is converting POSIXct date-times to Dates, using a predicate function to pick the columns:

```r
library(lubridate)  # is.POSIXt()
library(dplyr)      # mutate_if()

# Two order timestamps; c() is needed, otherwise the second string is taken as the tz argument
x <- data.frame(date = as.POSIXct(c("2010-12-07 08:00:00", "2010-12-08 08:00:00"), tz = "UTC"),
                orderid = c(1, 2))
str(x)

# Convert every POSIXt column to Date, choosing the columns with a predicate function
x <- mutate_if(x, is.POSIXt, as.Date)
str(x)
```

purrr really shines when you need to iterate over elements with custom functions, but it can be hard to come to grips with if all you have used is mutate_if(). Conversely, it can be just as hard to figure out what these newer dplyr functions can do for you if you have never seen them in action.

R has real merits as a functional programming language: it lets you operate on data with ease, and there are plenty of functions at your disposal to do so. If anything, one of the biggest challenges I faced while writing this article was knowing where to start looking for a solution: the R manual, Stack Overflow, a particular package, or the RStudio Community. In the right hands, and with the right skill set, R can be a powerful tool, but it is not for the faint of heart. What do you think? Is R powerful enough for your everyday tasks, or does base R feel unstable at times?